
Bulletin of the Technical Committee on Learning Technology (ISSN: 2306-0212)


Authors:

Wen-Hsiu Wu *1, Guan-Ze Liao 1

Abstract:

Technology is more than a tool; using technology is also a skill that must be developed. Teachers are expected to integrate technology into their teaching, but whether they have the required technological expertise is often neglected. Therefore, technologies that teachers can use easily must be developed. In this study, an algorithm was developed that integrates Google Sheets with Line to offer teachers who are unfamiliar with programming a quick method for constructing a chatbot based on their teaching plan, question design, and the materials prepared for a reading class. To meet the needs of reading classes, reading theories and effective reading instruction are incorporated into the learning and teaching mechanisms of the chatbot system. To create a guidance structure suitable for students of varying ability levels, Nelson’s multipath digital reading model was employed because it can maintain a reading context while simultaneously responding to the diverse reading experiences of different readers.

Keywords: Educational technology, Learning management systems, Mobile learning

I. INTRODUCTION

According to [1], using technological tools to supplement teaching and learning activities can help students access information efficiently, foster students’ self-directed learning, and improve the quality of instruction. However, many teachers continue to adhere to traditional teaching methods rather than integrating technology into their teaching because of negative attitudes toward and low confidence in technology use; insufficient professional knowledge and technological competence; and a lack of technological resources, training, and support [2], [3]. Therefore, this study focused on developing a system that matches teachers’ technological abilities and teaching needs.

In this study, a reading class was the target context. Drawing on theories of reading comprehension, Kintsch’s single-text model [4] and Britt’s multitext model [5] were integrated into the design of the learning mechanism. To assist students at the level of comprehension at which they struggle, Nelson’s multipath reading model [6] was employed to design the question-and-answer mechanism.

To make the system easy to operate and access for teachers without a programming background, Line, the most widely used messaging platform in Taiwan, was used as the front-end interface, and Google Sheets, a commonly used cloud-based spreadsheet, was employed as the database storing teaching content and learning records. Programs and algorithms were developed in Google Apps Script to connect the Line and Google Sheets services.

A. Models of Reading Comprehension

According to Kintsch’s reading comprehension model, the construction–integration model, reading comprehension is a process of continuous construction and integration [4], [7]. In this model, each sentence in a text is transformed into a semantic unit called a proposition, and the reader constructs a coherent understanding by continually recombining these propositions in an orderly fashion. Reference [8] reviewed studies on single-text processing and posited that reading involves at least three levels of memory representation. The surface level represents the decoding of word meanings in the early stage of reading. The textbase level represents the transformation of a text into a set of propositions on the basis of lexical knowledge, syntactic analysis, and information retrieved from memory. The situation level represents the construction of a coherent understanding of the situation described in the text, drawing on the reader’s accumulated life experience.

The main limitation of a single text is that it reflects only the viewpoint of a specific author rather than a comprehensive range of viewpoints. Even when arguments are objectively summarized in a literature review, the author still selects from among the original sources. According to [5] and [9], if students are to address an issue critically and construct a complete understanding of it, they should learn by reading authentic texts, practice selecting and organizing information, and interpret ideas in their own way. In multitext reading, texts provide raw information; readers must therefore be clear about the purpose of their reading if they are to select and integrate relevant information and manage diverse or even contradictory viewpoints; otherwise, they may become lost in an ocean of information. Britt et al. extended the Kintsch model into the documents model and suggested that a higher level of proposition is event related and spans several clauses and paragraphs; this level underlies the construction of understanding in multitext reading [5], [10]. Reference [8] reviewed studies on multitext reading and concluded that the reading process involves at least three memory representations: the integrated model represents the reader’s global understanding of the situation described across several texts, the intertext model represents their understanding of the source material, and the task model represents their understanding of their goals and of appropriate strategies for achieving them. Compared with Kintsch’s theory, multitext reading theory is more reader directed and emphasizes how the reader constructs a coherent and reasonable understanding from texts representing various viewpoints.

As suggested in [8], the challenge in teaching multiple-document reading is designing a guidance structure that accommodates the reading paths of different students. Nelson proposed a digital reading model that can maintain a context while simultaneously responding to the diverse reading experiences of different readers. He suggested breaking a text into smaller units and inserting hyperlinks in them, allowing readers to jump from the current document to the linked content without affecting the structure of the text [6]. Reference [11] applied Nelson’s model concretely by treating reading units as nodes, interunit relationships as links, and the reading experience as a network composed of nodes and links. Accordingly, the collection of content with which a reader interacts can be treated as a representation of that reader’s reading process. Nelson’s multipath digital reading model inspired us to transfer the complex teacher–student interaction of reading instruction to a chatbot system: learning content can be treated as nodes, and question–answer pairs as links to related learning content. If the question–answer pairs fully represent students’ understanding, students can be guided to the content they need on the basis of the answers they select. The following section explains the factors that a well-designed question–answer framework must account for.
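The node-and-link view of reading can be sketched as a small data structure. This is a minimal illustration, not the system’s implementation: the `Node` class, the `follow_path` function, and the sample content are hypothetical, and each answer label is assumed to point to exactly one next node.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A unit of learning content (a node in Nelson's sense)."""
    node_id: str
    text: str
    # Links: answer label -> ID of the next node (hypothetical structure)
    links: dict[str, str] = field(default_factory=dict)


def follow_path(nodes: dict[str, Node], start: str, answers: list[str]) -> list[str]:
    """Return the IDs of the nodes a reader visits, given the answers they select."""
    path = [start]
    current = nodes[start]
    for answer in answers:
        next_id = current.links.get(answer)
        if next_id is None:  # answer not anticipated by the designer: stop here
            break
        path.append(next_id)
        current = nodes[next_id]
    return path


# Hypothetical network: one question whose two answers link to different content
nodes = {
    "q1": Node("q1", "What is the main idea of paragraph 1?", {"A": "c1", "B": "c2"}),
    "c1": Node("c1", "Correct. Now compare it with paragraph 2."),
    "c2": Node("c2", "Reread sentence 3. Which word signals a contrast?"),
}
print(follow_path(nodes, "q1", ["B"]))  # ['q1', 'c2']
```

The returned path plays the role of the collection of content a reader interacts with: two students who choose different answers trace different paths through the same network.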

B. Design of Questions and Instructions

Two kinds of reading intervention are employed to promote comprehension [12]: instructional frameworks targeting self-regulated learning, which are used for basic-level comprehension, and frameworks targeting teacher-facilitated discussion, which are used for high-level comprehension and critical–analytic thinking. Among interventions for teacher-facilitated discussion, questioning differs from direct explanation and strategy instruction, which develop reading comprehension through the direct transfer of skills. Instead, questioning, in which students are asked questions step by step, helps them actively construct and develop their understanding of a text.

A good question does not always come to mind easily; thus, teachers must prepare well before class. According to [13], before designing questions, teachers must have a general understanding of the text, anticipate probable student reactions, possess specific thinking skills, and decide which competencies should be evaluated. According to [14] and [15], the level of a question should be based on the complexity of the cognitive processing required to answer it. Factual questions, which demand the lowest level of processing, require students to recall relevant information from the text; paraphrased questions require students to recall specific concepts and express them in their own words; interpretive questions require students to search for and deduce relationships among concepts that are not explicitly stated in the text; and evaluative questions, which demand the highest level of processing, require students to analyze and evaluate a concept in the text by using the context and their prior knowledge.

Questions not only reflect the level of comprehension but can also promote thinking. When higher-level questions are posed, students are more likely to think beyond the surface of the topic [16]. For example, a student who answers factual questions correctly has not necessarily gained insight from the facts. If the teacher then asks the student to paraphrase or interpret a concept, which reveals whether the student can link facts together, the student is likely to demonstrate higher-level competency [16].
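The ordering of question types described in [14] and [15] maps naturally onto an ordered enumeration. The sketch below is illustrative only: `QuestionLevel` and `next_question_level` are hypothetical names, and the rule of stepping up one level after a correct answer (echoing [16]) and down one level after an incorrect one is an assumed policy, not one specified in the cited work.

```python
from enum import IntEnum


class QuestionLevel(IntEnum):
    """Question types ordered by the cognitive processing they demand."""
    FACTUAL = 1       # recall information stated in the text
    PARAPHRASED = 2   # restate a concept in one's own words
    INTERPRETIVE = 3  # deduce relationships not explicitly stated
    EVALUATIVE = 4    # judge a concept using context and prior knowledge


def next_question_level(level: QuestionLevel, answered_correctly: bool) -> QuestionLevel:
    """Assumed policy: move up one level after a correct answer, down one after an error."""
    if answered_correctly:
        return QuestionLevel(min(level + 1, QuestionLevel.EVALUATIVE))
    return QuestionLevel(max(level - 1, QuestionLevel.FACTUAL))


print(next_question_level(QuestionLevel.FACTUAL, True).name)        # PARAPHRASED
print(next_question_level(QuestionLevel.EVALUATIVE, True).name)     # EVALUATIVE
print(next_question_level(QuestionLevel.INTERPRETIVE, False).name)  # PARAPHRASED
```

Using `IntEnum` keeps the levels comparable, so a designer can check, for instance, whether a follow-up question is of a higher order than the one just answered.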

In recent years, the reading framework of the OECD’s Programme for International Student Assessment (PISA) [17] has increasingly emphasized the role of the reader’s personal reflection in reading comprehension. However, irrespective of whether questions require students to organize information from texts, use their prior knowledge, or reflect on their life experiences, students must respond in accordance with the context of the text. In other words, they should not simply express their opinions and feelings as they wish. If making deductions from a text is the main competency to be assessed, students’ level of comprehension can be determined by evaluating which original sources they select to support the thoughts they express. Moreover, students who are asked to cite original sources are more likely to stay on topic and to demonstrate critical thinking [9].

Good questioning practice requires teachers to consider students’ differing levels and provide assistance accordingly. Different types of questions correspond to different levels of reading comprehension, and higher-order questions involve more complex cognitive strategies than lower-order questions. Reference [18] stated that for students who have trouble constructing meaning from a text, teachers should provide a supporting task, such as word recognition. References [14] and [19] have highlighted that for students who need help answering challenging questions, teachers should encourage more advanced use of thinking skills, such as metacognition and awareness of one’s own thinking.

The instant feedback that a teacher can provide in response to a student’s reply cannot easily be replaced by a predetermined instructional framework. Rather than replacing face-to-face instruction, the system aims to address problems encountered during oral question-and-answer sessions and to provide teachers with information on students’ learning to support further counseling. Because it is difficult for a teacher to identify how students make inferences from texts during oral communication, a recording mechanism is needed to help the teacher note the source of each student’s inference. According to [20], poor oral presentation skills can impair students’ understanding of questions even when the teacher is well prepared; a digital tool that fully implements the teacher’s questioning framework can therefore help prevent such misunderstandings. According to [21], some students miss opportunities to practice because they are reluctant to express themselves in public; an individual-oriented learning system can give every student an equal opportunity to practice.

Based on these considerations, the main design guidelines of the system were defined as follows. The question design should support true/false, multiple-choice, and essay questions for students at different levels. The reply mechanism should support self-expression and link replies to the corresponding sources in the text. The system must provide a basic mechanism for determining students’ level of reading comprehension from their qualitative replies and must guide them to reread the text for self-modification and self-monitoring.

C. Application of a Chatbot

ELIZA, one of the earliest chatbots, was developed by Weizenbaum in 1966 and used algorithmic processing and predefined responses to interact with humans [22]. Chatbots are commonly used to assist individuals in completing specific tasks, and their dialogues are designed to be purposeful and guided [23].

Chatbots have recently been widely applied in educational settings and have been shown to benefit learning. For example, in [24] and [25], chatbots applied to language learning were found to stimulate interest and motivation and to increase students’ willingness to express themselves. One study [26], in which chatbots were applied to computer education, revealed that students who learned in a chatbot-based learning environment performed comparably to those who learned through traditional methods. Moreover, Tracey et al. [27] recently developed a chatbot framework that uses natural language processing (NLP) to generate appropriate responses to inputs. They used NLP to distinguish and label students’ learning difficulties, connect students with learning subjects at the corresponding grade level, and quickly retrieve learning content that met the students’ needs. Villegas-Ch et al. [28] integrated a chatbot into a university’s learning management system and employed artificial intelligence to analyze the factors that motivate students to learn actively, monitor academic performance, and provide academic advice. Their results indicated that the approach increased student participation in courses.

Many commonly used communication platforms and free digital resources now support chatbot development, whereas designing and maintaining a dedicated teaching-aid system would be time-consuming. Chatbots are already highly usable and widely accepted by the public, so building a chatbot on an existing platform should reduce users’ cognitive load during the learning process. Therefore, this study developed algorithms that link the services of two freely available platforms, Google and Line, and created a cloud spreadsheet that acts as a database for storing teaching content and learning records. Because the algorithms are connected to a spreadsheet, creating a new chatbot learning system with the proposed approach is easy: the spreadsheet is duplicated, and its settings are updated with the information of the new chatbot.

II. SYSTEM DESIGN

A. Instructional Flow Design

1) Structure

A text contains several propositions, which may be parallel to one another or subordinate to a larger proposition. Therefore, both the structure of textual analysis and the teaching structure are hierarchical. The proposed system has three levels: text, chapter, and content (Fig. 1). Each card in a carousel template represents one text, and multiple texts are supported (Fig. 2). Once they have added a new text in Google Sheets, chatbot designers can update the chatbot interface and carousel template to match their teaching structure (Fig. 2). Students can select any text at any time through a menu button labeled “Classes for Guided Reading of Texts,” which brings up the carousel template (Fig. 3). Each chapter has its own ID number, through which the system links the chapter’s learning content. For example, the ID of “Characteristic” is “01”; thus, when students press the “Characteristic” button, the system retrieves the teaching content labeled “010000,” posts it in the chatbot, and then moves to the next content according to the next ID assigned by the designer (Fig. 4).
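The chapter-to-content lookup can be sketched as follows. This is a minimal sketch under stated assumptions: the rows stand in for the Google Sheet (the column layout is hypothetical), the rule that a chapter’s first content ID is the chapter ID followed by “0000” is generalized from the single “01” to “010000” example, and only a linear flow is followed, whereas in the real system a question would wait for the student’s answer.

```python
# Hypothetical rows of the teaching-content sheet: (content ID, message, next ID)
CONTENT_ROWS = [
    ("010000", "Chapter 01: Characteristic. Let's begin.", "010001"),
    ("010001", "Read paragraph 1, then tap Next.", "010002"),
    ("010002", "What trait does the author emphasize?", ""),  # empty: end of flow
]


def build_index(rows):
    """Index rows by content ID so each lookup is a dictionary access."""
    return {cid: (message, next_id) for cid, message, next_id in rows}


def chapter_start_id(chapter_id: str) -> str:
    """Assumed rule: two-digit chapter ID + '0000', e.g. '01' -> '010000'."""
    return chapter_id.zfill(2) + "0000"


def play_chapter(index, chapter_id: str) -> list[str]:
    """Collect the messages posted for a chapter by following next IDs."""
    messages = []
    seen = set()  # guards against a cycle introduced by a mis-typed next ID
    cid = chapter_start_id(chapter_id)
    while cid and cid in index and cid not in seen:
        seen.add(cid)
        message, cid = index[cid]
        messages.append(message)
    return messages


index = build_index(CONTENT_ROWS)
for line in play_chapter(index, "01"):
    print(line)
```

Keying the content by ID rather than by row position means a next ID can point anywhere in the sheet, so designers can reorder rows or add content without breaking existing flows.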

Fig. 1. Teaching structure (for a sample text).

Fig. 2. Carousel template.

Fig. 3. Rich menu.

Fig. 4. Teaching content.

2) Instructional Content Design

According to Kintsch’s theory, instructions should assist students at the level at which they fail to arrive at a correct understanding of the text. At the surface level, instructions should provide word explanations. At the textbase level, instructions should help students connect propositions they have overlooked. At the situation level, the system should guide students to express a concept in their own way and in accordance with their experience. Because instructional contents are sometimes strongly interdependent rather than clearly distinct, the teacher’s instructional flow can be designed either as a linear sequence or with branches and flexibility that guide each student to content at an appropriate level, depending on whether the student knows specific concepts.

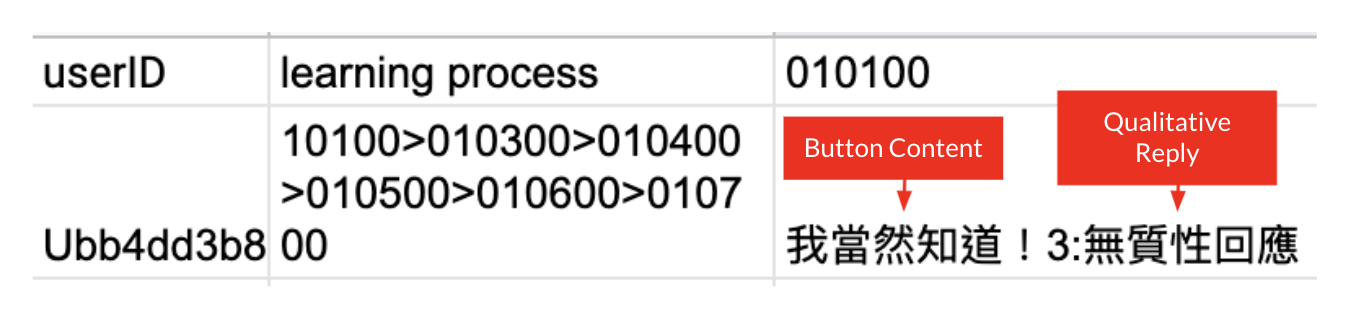

Each item of teaching content in an instructional flow is assigned a code (ID). Content in question form can create branches in the instructional flow, and each question can accept up to 13 branches. To determine which content is posted next, the teacher assigns a next-content ID to each branch of a question. According to multitext reading theory, at the integrated level, instructions should guide students to construct a global understanding of the texts. Therefore, each content ID is unique, so the next ID assigned to a branch is not limited to the text currently being studied. For paraphrased questions, which require students to respond in their own words, or when no option accurately represents a student’s thinking, setting the next ID to “000000” allows the student to reply by typing a response (Fig. 4). The system stores each student’s learning progress by recording the order in which the student encountered content, the buttons they pressed, and their replies (Fig. 5).
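The branching rules (at most 13 branches per question, a designer-assigned next ID per branch, and “000000” as the signal for a typed reply) can be sketched as follows. The class and function names, the `"await_text"` return value, the sample IDs (including `020105`, standing for content in another text), and the shape of the learning record are hypothetical; the sketch illustrates only the routing and logging logic, not the Apps Script code that runs the chatbot.

```python
from dataclasses import dataclass, field

MAX_BRANCHES = 13        # per-question branch limit stated in the text
FREE_TEXT_ID = "000000"  # next ID that switches the chatbot to a typed reply


@dataclass
class Question:
    content_id: str
    prompt: str
    branches: dict[str, str]  # button label -> next content ID

    def __post_init__(self):
        # Reject questions that exceed the branch limit at design time
        if len(self.branches) > MAX_BRANCHES:
            raise ValueError(f"{self.content_id}: at most {MAX_BRANCHES} branches allowed")


@dataclass
class LearningRecord:
    """Ordered log of (content ID, event type, value), in the spirit of Fig. 5."""
    events: list[tuple[str, str, str]] = field(default_factory=list)


def handle_button(q: Question, label: str, record: LearningRecord) -> str:
    """Log the pressed button; return the next content ID or 'await_text'."""
    next_id = q.branches[label]
    record.events.append((q.content_id, "button", label))
    return "await_text" if next_id == FREE_TEXT_ID else next_id


def handle_text(q: Question, reply: str, record: LearningRecord) -> None:
    """Log a typed reply to a question whose chosen branch led to FREE_TEXT_ID."""
    record.events.append((q.content_id, "text", reply))


q = Question("010002", "Which sentence best shows the author's view?",
             {"A": "010003", "B": "020105", "None of these": FREE_TEXT_ID})
record = LearningRecord()
print(handle_button(q, "None of these", record))  # await_text
handle_text(q, "I think the author is being ironic.", record)
print(len(record.events))  # 2
```

Validating the branch count when a question is constructed mirrors the kind of design-time check that would help designers avoid malformed content.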

For both multiple-choice and paraphrased questions, the system asks students to provide their qualitative reasoning and original sources; these responses reveal how students interpret texts (see Section II-B-5). When a student’s thinking is not represented by any option, the student’s qualitative reply is treated as an exceptional case that the teacher did not consider during the design stage, and all such replies are collected and passed to the teacher.

Fig. 5. Learning record.

B. Design of Question and Answer Mechanism

1) Questioning Mechanism

A correct answer does not necessarily show that a student fully understands a text; examining how students arrive at their interpretations gives a more accurate view of their actual thinking. According to Kintsch’s construction–integration model, a text is a combination of multiple propositions. Similarly, a reading comprehension question must be answered by combining different propositions. Therefore, by comparing the combinations of propositions used by the teacher and by the students, the system can determine whether students have overlooked specific aspects and then provide appropriate guiding questions to help them review the text.

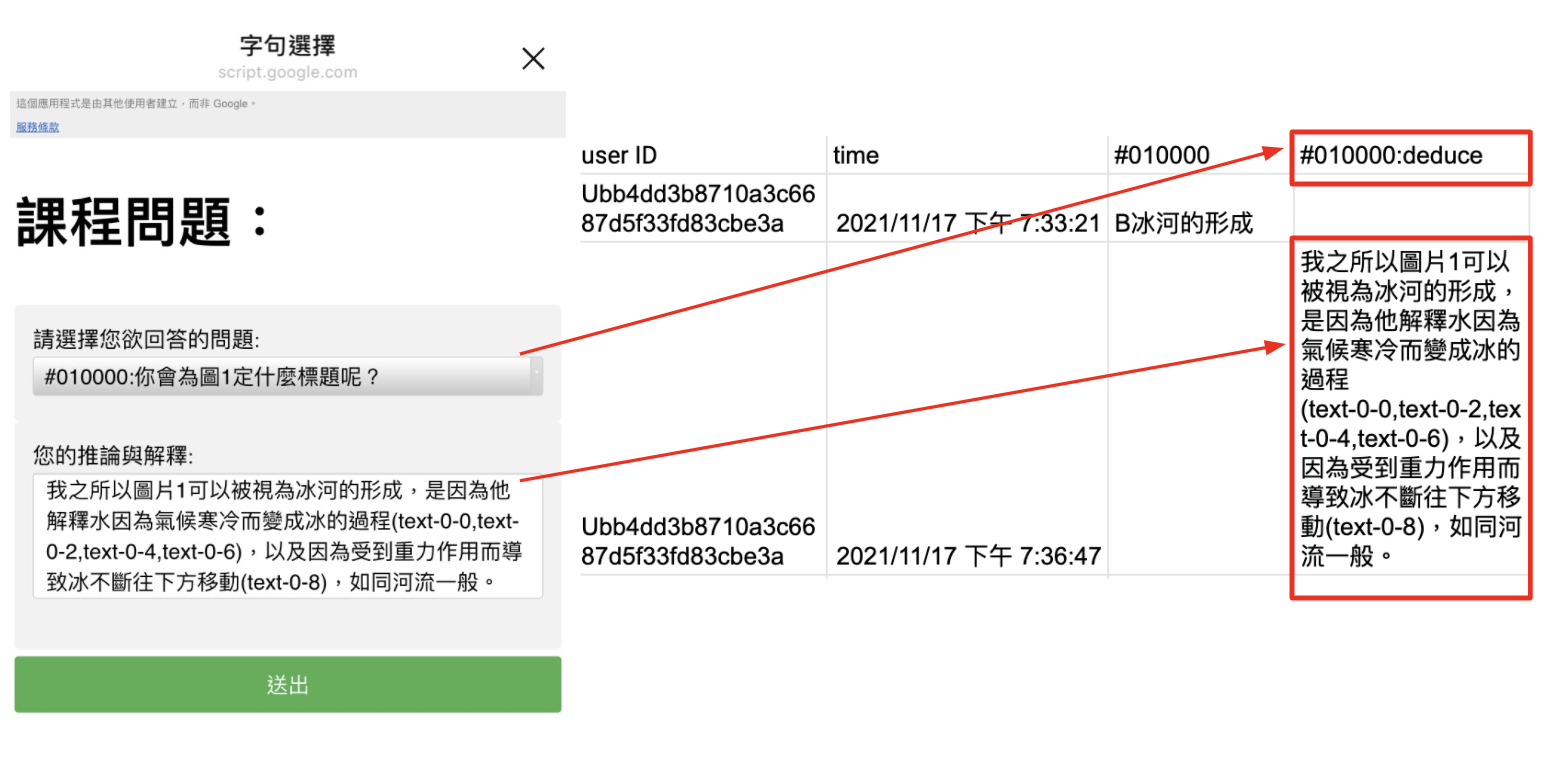

2) Text Processing

To help the teacher identify the connection between student responses and the text more effectively, the system segments the text provided by the teacher into sentence units, using punctuation marks as breakpoints, and assigns each unit a code. The system then creates a webpage from these sentence units and sends students the link to the webpage in a chatbot dialog (Fig. 6). The webpage guides students to reply, explain their reasoning, and pick sentences as sources supporting their viewpoint (Fig. 7). Because the webpage is connected to the Line Login service, the user’s identity is recognized, and students’ replies are recorded both in the Google Sheet used by the chatbot system and in a separate subsheet for storage (Fig. 8).
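The segmentation step can be sketched with a regular expression. The set of breakpoint marks (common Chinese and Western sentence-ending punctuation) and the text-ID-plus-sequence code format are assumptions for illustration; prefixing each code with the text ID is one way to keep sentence codes unique across texts. The zero-width split requires Python 3.7 or later, and a rule this simple also splits at decimal points and abbreviations such as “e.g.”, so a production version would need extra handling.

```python
import re

# Assumed breakpoints: Chinese and Western sentence-ending punctuation.
# The lookbehind splits *after* each mark, so the mark stays with its sentence.
_BREAK = re.compile(r"(?<=[。！？；.!?;])")


def segment(text_id: str, text: str) -> list[tuple[str, str]]:
    """Split a text at punctuation marks and code each unit, e.g. '01-003'."""
    units = [u.strip() for u in _BREAK.split(text) if u.strip()]
    return [(f"{text_id}-{i:03d}", unit) for i, unit in enumerate(units, start=1)]


for code, sentence in segment("01", "Cats sleep a lot. Why? They conserve energy."):
    print(code, sentence)
# 01-001 Cats sleep a lot.
# 01-002 Why?
# 01-003 They conserve energy.
```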

Fig. 6. Chatbot dialog when answering a question.

Fig. 7. The webpage.

Fig. 8. Record of the reply in Google Sheets.

3) Sentence Marker

When teachers design questions, they usually have a reference answer in mind and must draw on specific information and propositions from the text for support, interpretation, and inference. Therefore, teachers are asked to provide reference answers with the corresponding textual sources when designing questions. Similarly, students must select the textual sentences on which their interpretations are based. According to multitext reading theory, sourcing across texts is one of the main competencies to be developed and evaluated at the intertext level; therefore, each sentence is coded uniquely, so students can pick sentences across texts.

4) Sentence Match

To calculate the similarity between a student’s answer and the teacher’s reference answer, the system compares the source sentences cited in each. On the basis of the differences between these sources, the system can distinguish and label the completeness of the student’s comprehension and provide a guiding question with which the student can review the text.
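The text does not specify the similarity measure, so the sketch below substitutes the Jaccard index over cited sentence IDs; the completeness labels (“complete”, “partial”, “unsupported”), the function names, and the mapping from overlooked sentences to guiding prompts are likewise hypothetical.

```python
def compare_sources(student: set[str], teacher: set[str]) -> dict:
    """Compare the sentence IDs a student cited with the teacher's reference set."""
    shared = student & teacher
    union = student | teacher
    jaccard = len(shared) / len(union) if union else 1.0
    missing = teacher - student  # reference sources the student overlooked
    extra = student - teacher    # sources the teacher did not anticipate
    if not missing:
        label = "complete"
    elif shared:
        label = "partial"
    else:
        label = "unsupported"
    return {"jaccard": jaccard, "missing": sorted(missing),
            "extra": sorted(extra), "label": label}


def guiding_prompts(missing: list[str], prompts: dict[str, str]) -> list[str]:
    """Pick the designer's guiding prompt for each overlooked sentence, if any."""
    return [prompts[sid] for sid in missing if sid in prompts]


teacher = {"01-002", "01-004", "01-005"}
student = {"01-002", "01-007"}
result = compare_sources(student, teacher)
print(result["label"], result["jaccard"])  # partial 0.25
print(guiding_prompts(result["missing"], {"01-004": "How does sentence 4 qualify sentence 2?"}))
```

Reporting `extra` alongside `missing` keeps sources the teacher did not anticipate visible, since they may signal an exceptional interpretation worth passing to the teacher.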

5) Qualitative Replies Classification and Analysis

Because the learning patterns of a group of students are unknown at the beginning of a course, the teacher should track students’ learning processes over the long term and observe how their explanations and sentence selections evolve under the influence of the guiding questions provided by the system. Before analysis, replies consisting of a multiple-choice selection together with a qualitative explanation and supporting sentences are classified as correct or incorrect. Paraphrased replies, by contrast, are judged manually because the system cannot yet determine their correctness automatically. We are also interested in comparing how different students interpret a given question; thus, we plan to classify qualitative explanations on the basis of the sentence IDs they cite.
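The two analysis steps, automatic scoring of multiple-choice replies with manual review of paraphrased ones and grouping explanations by the sentence IDs they cite, can be sketched as follows. The reply fields (`question`, `choice`, `sources`, `explanation`) and the answer key are hypothetical.

```python
from collections import defaultdict


def classify(reply: dict, answer_key: dict[str, str]) -> str:
    """Score multiple-choice replies; route paraphrased (free-text) replies to a teacher."""
    if reply.get("choice") is None:
        return "manual"
    return "correct" if reply["choice"] == answer_key[reply["question"]] else "incorrect"


def group_by_sources(replies: list[dict]) -> dict[frozenset, list[str]]:
    """Group explanations by the exact set of sentence IDs each student cited."""
    groups = defaultdict(list)
    for r in replies:
        groups[frozenset(r["sources"])].append(r["explanation"])
    return dict(groups)


answer_key = {"Q1": "B"}
replies = [
    {"question": "Q1", "choice": "B", "sources": ["01-002", "01-004"],
     "explanation": "Sentence 4 limits the claim."},
    {"question": "Q1", "choice": "A", "sources": ["01-004", "01-002"],
     "explanation": "The author contradicts herself."},
    {"question": "Q1", "choice": None, "sources": ["01-007"],
     "explanation": "It is about memory."},
]
print([classify(r, answer_key) for r in replies])  # ['correct', 'incorrect', 'manual']
print(len(group_by_sources(replies)))  # 2
```

Keying groups by a `frozenset` makes them independent of the order in which sentences were picked, so the first two replies land in the same group even though they reach different answers from the same evidence.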

III. SUMMARY AND FUTURE RESEARCH

Integrating technology into teaching requires consideration of many aspects, such as teachers’ attitudes, their technological knowledge and ability, and their teaching needs, which are often overlooked. Because we believe that tools should be useful, not just usable, this study aimed to develop a teacher-friendly teaching-aid system grounded in theories of reading instruction and learning and in empirical studies of technology applications.

Thanks to technological advances and the willingness of these platforms to open their services to developers, we were able to link Google, Line, and web services through algorithmic mechanisms. The advantage of this integration is that, rather than spending considerable time and money developing a system, we can use the existing strengths and convenience of these platforms to achieve a similar experience. Moreover, as system developers, we can focus on developing and implementing pedagogical theories rather than on the basic operation and maintenance of the system.

To evaluate the usability of the system and guide its improvement, we will recruit students and teachers as participants. Because the system is a prototype, it has limitations. Some message types follow Line templates, which restrict the number of buttons, the length of content, and the appearance of messages. In addition, the Google Sheet used in this study cannot currently enforce restrictions or drop-down lists that would prevent designers from constructing learning content in an incorrect format. Therefore, many functions need to be implemented and improved to make the system more accessible to designers. Moreover, because students’ data stored in Google Sheets are currently difficult to read, the data must be organized; we plan to use the same Google Sheet format as the basis for another chatbot with which teachers can produce statistical analyses of students’ learning records.

The system is expected to help teachers understand how students make interpretations and inferences when reading a text. For students who fail to reach a correct understanding in particular, the relationship between their explanations and the text sentences they cite can help teachers counsel them and help researchers analyze the factors causing misunderstanding. In the future, we plan to apply machine learning models to further distinguish and label students’ reading difficulties.

References

[1] J. Fu, “Complexity of ICT in education: A critical literature review and its implications,” International Journal of Education and Development using ICT, vol. 9, no. 1, pp. 112-125, 2013.

[2] P. A. Ertmer, A. T. Ottenbreit-Leftwich, O. Sadik, E. Sendurur, and P. Sendurur, “Teacher beliefs and technology integration practices: A critical relationship,” Computers & Education, vol. 59, no. 2, pp. 423-435, 2012, DOI: 10.1016/j.compedu.2012.02.001

[3] F. A. Inan and D. L. Lowther, “Factors affecting technology integration in K-12 classrooms: A path model,” Educational Technology Research and Development, vol. 58, no. 2, pp. 137-154, 2010, DOI: 10.1007/s11423-009-9132-y

[4] W. Kintsch, Comprehension: A Paradigm for Cognition. Cambridge: Cambridge University Press, 1998.

[5] M. A. Britt, C. A. Perfetti, R. Sandak, and J.-F. Rouet, “Content integration and source separation in learning from multiple texts,” in Narrative comprehension, causality, and coherence: Essays in honor of Tom Trabasso. Mahwah, NJ: Lawrence Erlbaum Associates, 1999, pp. 209-233.

[6] T. H. Nelson, “Complex information processing: a file structure for the complex, the changing and the indeterminate,” in Proceedings of the 1965 20th National Conference, 1965, pp. 84-100, DOI: 10.1145/800197.806036

[7] T. A. Van Dijk and W. Kintsch, Strategies of Discourse Comprehension. New York: Academic Press, 1983.

[8] S. R. Goldman et al., “Disciplinary literacies and learning to read for understanding: A conceptual framework for disciplinary literacy,” Educational Psychologist, vol. 51, no. 2, pp. 219-246, 2016, DOI: 10.1080/00461520.2016.1168741

[9] Ø. Anmarkrud, I. Bråten, and H. I. Strømsø, “Multiple-documents literacy: Strategic processing, source awareness, and argumentation when reading multiple conflicting documents,” Learning and Individual Differences, vol. 30, pp. 64-76, 2014, DOI: 10.1016/j.lindif.2013.01.007

[10] M. A. Britt and J.-F. Rouet, “Learning with multiple documents: Component skills and their acquisition,” in Enhancing the quality of learning: Dispositions, instruction, and learning processes, J. R. Kirby and M. J. Lawson, Eds. Cambridge: Cambridge University Press, 2012, pp. 276-314.

[11] T. J. Berners-Lee and R. Cailliau, “Worldwideweb: Proposal for a hypertext project,” CERN European Laboratory for Particle Physics, Nov. 1990.

[12] M. Li et al., “Promoting reading comprehension and critical–analytic thinking: A comparison of three approaches with fourth and fifth graders,” Contemporary Educational Psychology, vol. 46, pp. 101-115, 2016, DOI: 10.1016/j.cedpsych.2016.05.002

[13] W. J. Therrien and C. Hughes, “Comparison of Repeated Reading and Question Generation on Students’ Reading Fluency and Comprehension,” Learning Disabilities: A Contemporary Journal, vol. 6, no. 1, pp. 1-16, 2008.

[14] P. Afflerbach and B.-Y. Cho, “Identifying and describing constructively responsive comprehension strategies in new and traditional forms of reading,” in Handbook of Research on Reading Comprehension. New York: Routledge, 2009, pp. 69-90.

[15] T. Andre, “Does answering higher-level questions while reading facilitate productive learning?,” Review of Educational Research, vol. 49, no. 2, pp. 280-318, 1979, DOI: 10.3102/00346543049002280

[16] S. Degener and J. Berne, “Complex questions promote complex thinking,” The Reading Teacher, vol. 70, no. 5, pp. 595-599, 2017, DOI: 10.1002/trtr.1535

[17] OECD, PISA 2018 Assessment and Analytical Framework. Paris: OECD Publishing, 2019.

[18] B. M. Taylor, P. D. Pearson, K. F. Clark, and S. Walpole, “Beating the Odds in Teaching All Children To Read,” Center for the Improvement of Early Reading Achievement, University of Michigan, Ann Arbor, Michigan, CIERA-R-2-006, 1999.


[19] B. M. Taylor, P. D. Pearson, D. S. Peterson, and M. C. Rodriguez, “Reading growth in high-poverty classrooms: The influence of teacher practices that encourage cognitive engagement in literacy learning,” The Elementary School Journal, vol. 104, no. 1, pp. 3-28, 2003, DOI: 10.1086/499740

[20] E. A. O’Connor and M. C. Fish, “Differences in the Classroom Systems of Expert and Novice Teachers,” presented at the meeting of the American Educational Research Association, 1998.

[21] D. Linvill, “Student interest and engagement in the classroom: Relationships with student personality and developmental variables,” Southern Communication Journal, vol. 79, no. 3, pp. 201-214, 2014, DOI: 10.1080/1041794X.2014.884156

[22] G. Davenport and B. Bradley, “The care and feeding of users,” IEEE MultiMedia, vol. 4, no. 1, pp. 8-11, 1997, DOI: 10.1109/93.580390

[23] A. Rastogi, X. Zang, S. Sunkara, R. Gupta, and P. Khaitan, “Towards scalable multi-domain conversational agents: The schema-guided dialogue dataset,” in Proceedings of the AAAI Conference on Artificial Intelligence, 2020, vol. 34, no. 05, pp. 8689-8696, DOI: 10.1609/aaai.v34i05.6394

[24] L. Fryer and R. Carpenter, “Bots as language learning tools,” Language Learning & Technology, vol. 10, no. 3, pp. 8-14, 2006, hdl: 10125/44068

[25] L. K. Fryer, K. Nakao, and A. Thompson, “Chatbot learning partners: Connecting learning experiences, interest and competence,” Computers in Human Behavior, vol. 93, pp. 279-289, 2019, DOI: 10.1016/j.chb.2018.12.023

[26] J. Yin, T.-T. Goh, B. Yang, and Y. Xiaobin, “Conversation technology with micro-learning: The impact of chatbot-based learning on students’ learning motivation and performance,” Journal of Educational Computing Research, vol. 59, no. 1, pp. 154-177, 2021, DOI: 10.1177/0735633120952067

[27] P. Tracey, M. Saraee, and C. Hughes, “Applying NLP to build a cold reading chatbot,” in 2021 International Symposium on Electrical, Electronics and Information Engineering, 2021, pp. 77-80, DOI: 10.1145/3459104.3459119

[28] W. Villegas-Ch, A. Arias-Navarrete, and X. Palacios-Pacheco, “Proposal of an Architecture for the Integration of a Chatbot with Artificial Intelligence in a Smart Campus for the Improvement of Learning,” Sustainability, vol. 12, no. 4, p. 1500, 2020, DOI: 10.3390/su12041500

This research was supported by the Ministry of Science and Technology, Taiwan (MOST 110-2410-H-007-059-MY2).

Authors

Wen-Hsiu Wu

received her B.S. and M.S. in Physics from National Tsing Hua University (NTHU, Taiwan, R.O.C.). She is currently a graduate student in the Institute of Learning Sciences and Technologies at National Tsing Hua University. Her research interests include the analysis and design of digital learning environments, specifically designing for cross-disciplinary learning and reading comprehension.

Guan-Ze Liao

received his doctoral degree from the Design program at National Taiwan University of Science and Technology (NTUST). During his doctoral studies, he incorporated professional knowledge from various disciplines (e.g., multimedia, interaction design, visual and information design, arts and science, interactive media design, computer science, and information communication) into research and applications in human interaction design and communication and media design. His cross-disciplinary research interests involve information media research methods, interaction/interface design, multimedia games, and computation on geometric patterns. He is currently a professor in the Institute of Learning Sciences and Technologies at National Tsing Hua University (NTHU, Taiwan, R.O.C.). His professional experience focuses on digital humanities, game-based learning, visual narrative, information design, and design for learning.